The Beginning of Parallel Programming

The beginning of parallel programming

Traditional Concurrency Problem

For every programmer who has experienced writing some code that was running in paralism, the followings concurrency techniques would’t be un-familiar.

- The Producer-Consumer Problem (wait - notify)

- The Writer-Reader Problem (mutable shared state)

Both techniques are strapped by the same problem:

Waiting

The Harsh Realities of Parallelization

In computer architecture, Amdahl’s law is a formula which gives the theoretical speedup in latency of the execution of a task at fixed workload that can be expected of a system whose resources are improved.

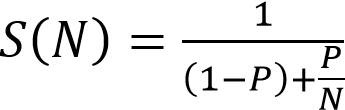

Amdalh’s Law

- S(N): N processors speedup.

- N: N processors.

- P: fraction of jobs can be executed in parallel.

Exmaple.

There’re some 3 jobs:

- A.size = 2

- B.size = 2

- C.size = 1

With 3 processors that can only execute and reduce job’s size in 1 unit in one concurrent execution.

So the P = 3 / 5, N = 3. We can work out that the S(N) = 5 / 3 ≈ 1.67.

The SpeedUp describe how much performance improvements could be brought if we increase the processors. But the result is far below our expect that S(N) should be equal to 3 with 3 processors.

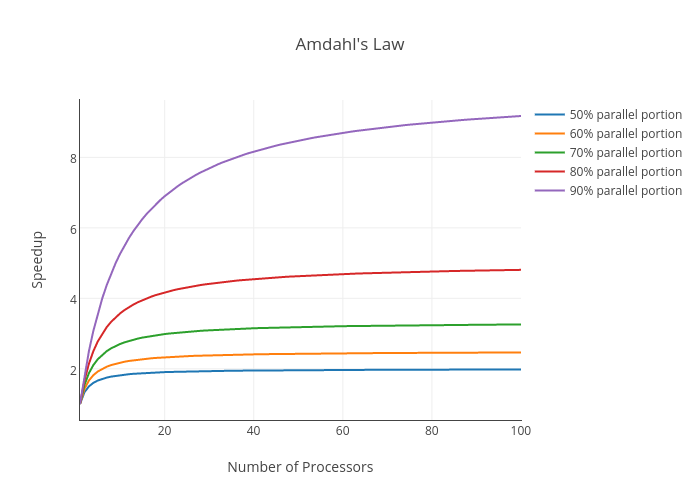

Also there’s a graph that charts the relationship among them:

Then we can conclude:

- Consider hardware cost, add numbers of processors does not always work well. The more processors we added, the less marginal processor’s speedup we can get.

- Increasing parallel jobs portion can effectively improve speedup.